An Introduction to Retrieval-Augmented Generation Models

By Borchuluun Yadamsuren, Assistant Director of Analytics, Quinlan School of Business, Loyola University of Chicago

In the rapidly evolving landscape of artificial intelligence (AI), Retrieval-Augmented Generation (RAG) models have emerged as a groundbreaking technology with transformative potential for many industries. By integrating information retrieval with language generation, RAG models represent a significant advancement in the application of generative AI. This article presents an overview of how RAG works, outlines its unique advantages, and explores potential business use cases.

What is Retrieval-Augmented Generation (RAG)?

RAG was introduced by Lewis et al.[i] as a paradigm for enhancing the generative tasks of large language models (LLMs). It optimizes the output of an LLM by having the model reference an authoritative knowledge base outside its training data before generating a response.[ii]

LLMs are trained on vast volumes of publicly available data and use billions of parameters to generate original output for tasks such as answering questions, writing articles, translating languages, and holding long conversations with users. However, they are not trained on the private data of specific users or institutions. An LLM can be fine-tuned on such data, but fine-tuning is very expensive, and it is hard to keep a model continually updated with the latest information. In addition, it is not obvious how an LLM arrived at its answer to a user prompt.

RAG incorporates knowledge from external databases to address significant limitations of LLMs, such as hallucination, outdated knowledge, and opaque, untraceable reasoning processes. It enhances the accuracy and credibility of the models, particularly for knowledge-intensive tasks, and allows for continuous knowledge updates and the integration of domain-specific information. In this way, RAG merges an LLM’s intrinsic knowledge with the vast, dynamic repositories of external databases.[iii] It extends the already powerful capabilities of LLMs to specific domains or to an organization's internal knowledge base without retraining the model. It is therefore considered a cost-effective way to keep LLM output relevant, accurate, and useful in various contexts.

How does RAG work?

The RAG workflow comprises three key steps.[iii] First, indexing: the external data corpus, which lies outside the LLM's original training data, is converted into plain text and partitioned into discrete chunks. The external data can come from multiple sources, such as APIs, databases, or document repositories, and may exist in various formats, such as PDF, HTML, Word files, or database records. An embedding model converts each chunk into a numerical representation (a vector embedding), which is stored in a vector database. The result is an index that stores the text chunks and their vector embeddings as key-value pairs, enabling efficient and scalable search. In this way, the process creates a knowledge library that generative AI models can draw on.
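
The indexing step can be sketched in a few lines of Python. This is a minimal, illustrative sketch rather than a production pipeline: the function names are hypothetical, chunking is done by a fixed number of words, and a simple term-count vector stands in for a real embedding model (which would produce dense numerical vectors). A plain dictionary plays the role of the vector database, mapping each chunk ID to its text and vector.

```python
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 50) -> list[str]:
    """Split plain text into consecutive chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse vector of lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(documents: list[str], chunk_size: int = 50) -> dict[int, tuple[str, Counter]]:
    """Chunk every document and store chunk_id -> (chunk text, vector) key-value pairs."""
    index: dict[int, tuple[str, Counter]] = {}
    for doc in documents:
        for chunk in chunk_text(doc, chunk_size):
            index[len(index)] = (chunk, embed(chunk))
    return index


docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Shipping is free for orders over 50 dollars.",
]
index = build_index(docs, chunk_size=8)
for chunk_id, (text, vector) in index.items():
    print(chunk_id, text)
# 0 Our refund policy allows returns within 30 days
# 1 of purchase.
# 2 Shipping is free for orders over 50 dollars.
```

Note that the first document is cut mid-sentence by the fixed-size split, a typical weakness of the simplest chunking strategy.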

Second, retrieval: RAG identifies and retrieves the chunks most relevant to the user query. The query is converted into a vector representation, typically with the same embedding model used for indexing, and compared against the vectors in the database. Relevance is scored with a vector similarity measure, such as cosine similarity, and the top-ranked chunks are returned.
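
The retrieval step can be illustrated with cosine similarity, one common way to score how close two vectors are: cos(q, c) = (q · c) / (‖q‖ ‖c‖). The sketch below is self-contained and rests on a simplifying assumption: `embed` is a hypothetical term-count stand-in for a real embedding model, and the query is embedded with the same function as the chunks. The hypothetical `retrieve` function returns the `k` chunks with the highest scores.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse vector of lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Embed the query, score every chunk, and return the top-k chunks by similarity."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


chunks = [
    "Our refund policy allows returns within 30 days.",
    "Shipping is free for orders over 50 dollars.",
    "Support is available from 9 to 5 on weekdays.",
]
print(retrieve("What is the refund policy?", chunks, k=2))
# ['Our refund policy allows returns within 30 days.', 'Shipping is free for orders over 50 dollars.']
```

For clarity, this sketch re-embeds every chunk on each query and scans them all; a real vector database stores the chunk vectors once at indexing time and typically uses approximate nearest-neighbor search to scale to large corpora.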

Finally, generation: the model synthesizes a response conditioned on the contextual information in the retrieved chunks. RAG augments the user's input prompt by adding the relevant retrieved data as context, using prompt engineering techniques to communicate effectively with the LLM. The augmented prompt helps the LLM generate a more accurate answer to the user's query. Together, these steps form the fundamental framework of the RAG process, combining information retrieval with context-aware generation.
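
The augmentation part of this step amounts to building a prompt string. The sketch below shows one possible template; the function name and the instruction wording are assumptions for illustration, not a standard format. The resulting string would then be sent to whichever LLM the application uses, a call that is not shown here.

```python
def build_augmented_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt string."""
    # Number each chunk so the model (and a reader) can refer back to its source.
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_augmented_prompt(
    "How long do I have to return an item?",
    [
        "Our refund policy allows returns within 30 days.",
        "Shipping is free for orders over 50 dollars.",
    ],
)
print(prompt)
```

Asking the model to rely only on the supplied context, and to admit when the answer is missing, is a common prompt engineering tactic for reducing answers that are not grounded in the retrieved data.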

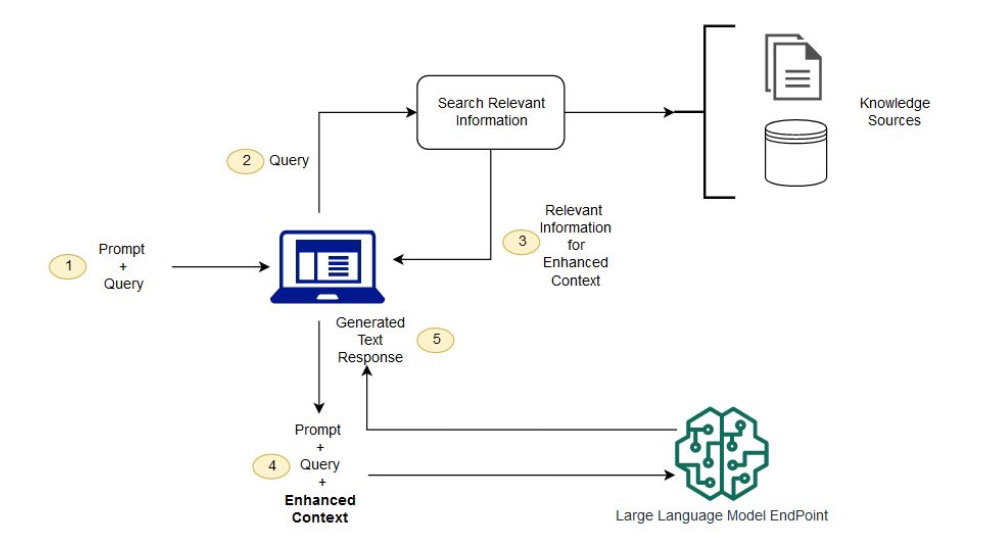

Suppose users ask ChatGPT about breaking news, such as election poll results or stock price fluctuations. Without RAG, ChatGPT takes the user prompt and creates a response based on the information on which it was trained. Its response is therefore constrained by its pretraining data and lacks knowledge of recent events. RAG can close this gap by retrieving up-to-date documents from external knowledge sources, such as news websites or social media. This additional knowledge is combined with the original question into an enriched prompt that enables ChatGPT to synthesize a more accurate response. This example illustrates the RAG process (Figure 1) and its capability to enhance an LLM’s responses with real-time information retrieval.

Figure 1. RAG process (source: Amazon)

The RAG research paradigm is continuously advancing. This evolution has been driven by various innovative approaches addressing questions such as “what to retrieve,” “when to retrieve,” and “how to use the retrieved information.”[iii]

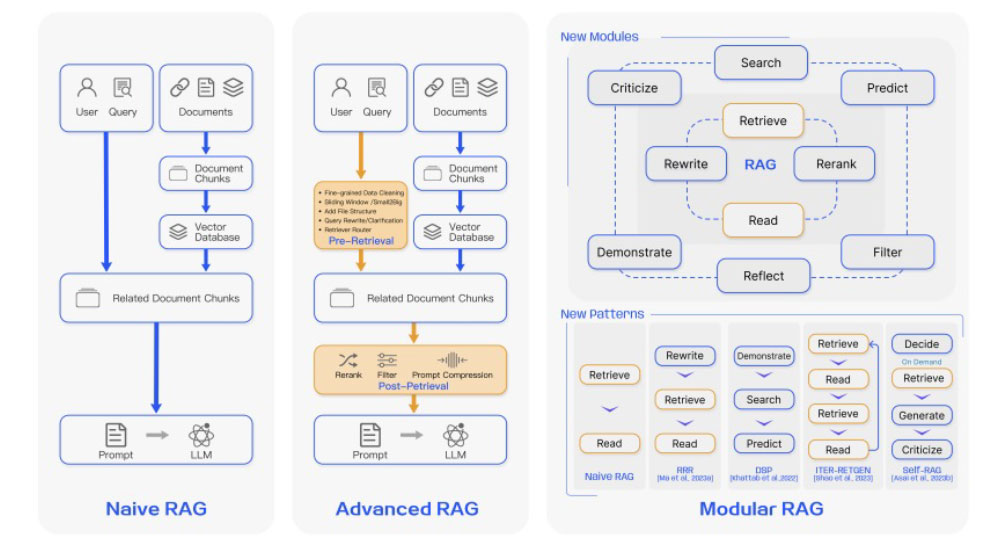

There are three types of RAG: Naive RAG, Advanced RAG, and Modular RAG. Naive RAG is the earliest paradigm and follows a traditional process of indexing, retrieval, and generation. This approach faces significant challenges in three key areas: retrieval, generation, and augmentation. In retrieval, low precision leads to misaligned chunks and potential hallucinations, while low recall means that not all relevant chunks are retrieved, which hinders the LLM’s ability to provide accurate responses. In generation, the model may hallucinate, producing answers that are not grounded in the retrieved context. In augmentation, integrating the retrieved information with the task can lead to disjointed or incoherent output.
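
Precision and recall have concrete definitions for a single query: precision is the share of retrieved chunks that are relevant, and recall is the share of relevant chunks that were retrieved. The sketch below computes both over the top `k` results; the function name, chunk IDs, and relevance labels are hypothetical, and in practice the labels would come from a human-annotated evaluation set.

```python
def precision_recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> tuple[float, float]:
    """Precision@k and recall@k for one query, given ranked chunk IDs."""
    top_k = retrieved[:k]
    hits = sum(1 for chunk_id in top_k if chunk_id in relevant)
    # Precision: share of retrieved chunks that are relevant (low => misaligned chunks).
    precision = hits / len(top_k) if top_k else 0.0
    # Recall: share of relevant chunks that were retrieved (low => missing information).
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


retrieved = ["c3", "c7", "c1", "c9"]  # ranked output of a hypothetical retriever
relevant = {"c1", "c2"}               # hypothetical ground-truth labels
print(precision_recall_at_k(retrieved, relevant, k=3))
# (0.3333333333333333, 0.5)
```

Here two of the three retrieved chunks are off-topic (low precision) and chunk `c2` is never retrieved (incomplete recall), which are the two retrieval failure modes described above.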

Advanced RAG was developed to address the shortcomings of Naive RAG. It adds pre-retrieval and post-retrieval strategies, such as rewriting the query before retrieval and reranking or compressing the retrieved chunks afterward. To improve indexing, Advanced RAG employs techniques such as sliding windows, fine-grained segmentation, and metadata.
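
A sliding-window chunker makes consecutive chunks overlap, so that text near a chunk boundary also appears together with its neighbors in another chunk, and it can attach metadata to each chunk. The sketch below is illustrative only: the function name, the metadata fields, and the default window and stride sizes (measured in words here; real systems often count tokens) are assumptions.

```python
def sliding_window_chunks(text: str, source: str, window: int = 6, stride: int = 4) -> list[dict]:
    """Split text into overlapping word windows, attaching metadata to each chunk."""
    if not 0 < stride <= window:
        raise ValueError("stride must be between 1 and window")
    words = text.split()
    chunks = []
    start = 0
    while start < len(words):
        chunks.append({
            "text": " ".join(words[start:start + window]),
            "source": source,     # metadata: where the chunk came from
            "start_word": start,  # metadata: position within the document
        })
        if start + window >= len(words):
            break  # this window already reaches the end of the text
        start += stride  # consecutive windows overlap by (window - stride) words
    return chunks


for chunk in sliding_window_chunks(
    "Returns are accepted within 30 days of purchase if the item is unused.",
    source="faq.txt",
):
    print(chunk["start_word"], chunk["text"])
# 0 Returns are accepted within 30 days
# 4 30 days of purchase if the
# 8 if the item is unused.
```

The phrase "30 days of purchase" is kept whole in the second chunk even though it straddles the boundary of the first, and the `source` field can later be used to filter results or cite where an answer came from.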

Modular RAG provides greater versatility and flexibility by integrating various methods to enhance its functional modules. This paradigm is becoming common practice and allows for either a serialized pipeline or end-to-end training across multiple modules. A comparison[iii] of the three RAG paradigms is presented in Figure 2.
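
One way to picture the modular idea is a serialized pipeline whose stages are interchangeable functions. Everything in this sketch is hypothetical: a keyword retrieval module, an optional reranking module that reorders chunks by query-term overlap (a simple stand-in for a real post-retrieval reranker), and a builder that wires the modules together. End-to-end training across modules is not shown.

```python
import re
from typing import Callable, Optional

Retriever = Callable[[str], list[str]]
Reranker = Callable[[str, list[str]], list[str]]


def tokens(text: str) -> set[str]:
    """Lowercase word tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def make_keyword_retriever(corpus: list[str]) -> Retriever:
    """Retrieval module: every chunk sharing a term with the query, in corpus order."""
    def retrieve(query: str) -> list[str]:
        query_terms = tokens(query)
        return [chunk for chunk in corpus if query_terms & tokens(chunk)]
    return retrieve


def overlap_reranker(query: str, chunks: list[str]) -> list[str]:
    """Post-retrieval module: put chunks containing more query terms first."""
    query_terms = tokens(query)
    return sorted(chunks, key=lambda chunk: len(query_terms & tokens(chunk)), reverse=True)


def make_pipeline(retriever: Retriever, reranker: Optional[Reranker] = None, top_k: int = 1):
    """Chain the modules; any module can be swapped without touching the others."""
    def run(query: str) -> list[str]:
        chunks = retriever(query)
        if reranker is not None:
            chunks = reranker(query, chunks)
        return chunks[:top_k]
    return run


corpus = [
    "Refunds are not available for gift cards.",
    "Refunds are issued within 30 days of a return request.",
]
retriever = make_keyword_retriever(corpus)
query = "How many days until refunds are issued?"
print(make_pipeline(retriever)(query))                    # without a reranking module
print(make_pipeline(retriever, overlap_reranker)(query))  # with a reranking module
```

Without the reranking module the pipeline returns the gift-card chunk simply because it comes first in the corpus; plugging in the reranker surfaces the chunk that actually answers the question, and no other module had to change.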

Figure 2. Comparison between the three types of RAG (source: Gao et al., 2023)

With the RAG approach, developers can enhance the efficiency of their generative AI-based applications. Frameworks such as LlamaIndex and LangChain are making it easier to develop, manage, and adapt RAG-based applications for different purposes. Developers can also limit access to sensitive information and help ensure that applications give accurate responses. This enables organizations to use generative AI technology with more assurance across a broader range of applications.
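
Limiting access to sensitive information can be as simple as filtering chunks by access metadata before retrieval, so that text a user may not see never reaches the prompt. The sketch below does not use any framework API; the `Chunk` class, its `allowed_roles` field, and the role names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset[str]  # hypothetical access-control metadata


def authorized_chunks(chunks: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    """Keep only chunks that share at least one role with the requesting user."""
    return [chunk for chunk in chunks if chunk.allowed_roles & user_roles]


knowledge_base = [
    Chunk("The office is closed on public holidays.", frozenset({"employee", "hr"})),
    Chunk("Salary bands for each job level.", frozenset({"hr"})),
]
visible = authorized_chunks(knowledge_base, {"employee"})
print([chunk.text for chunk in visible])
# ['The office is closed on public holidays.']
```

Only the filtered list would then be passed to the similarity search, so an employee's question can never be answered from the HR-only chunk.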

Potential use cases of RAG

The operational mechanics of RAG models represent a significant advancement in AI's ability to process language and generate sophisticated responses. By leveraging the latest in retrieval techniques and generative modeling, RAG offers a flexible, powerful tool for a wide range of applications. Some use case examples include:

- Enhanced Customer Support: RAG can transform customer service by providing real-time, accurate, and personalized responses to customer inquiries. By retrieving company-specific data, policies, and product information, RAG models can generate responses that are not only relevant but also tailored to individual customer contexts, substantially improving the customer support experience.

- Market Intelligence and Research: Businesses can leverage RAG for deep market analysis and research by synthesizing vast amounts of data from diverse sources. This can help companies gain insights into market trends, competitor strategies, and customer preferences, thereby informing strategic decision-making processes.

- Content Creation and Management: RAG's ability to generate coherent and contextually relevant text makes it an invaluable tool for content creation. From generating articles and reports to creating personalized marketing communications, RAG can significantly reduce the time and effort required for content production, allowing businesses to scale their content strategies efficiently.

- Personalized Recommendations: In the realm of e-commerce and content platforms, RAG models can offer highly personalized recommendations by analyzing user behavior, preferences, and feedback. By retrieving and processing user-specific data, RAG can generate suggestions that significantly enhance user engagement and satisfaction.

- Automated Data Analysis and Reporting: Businesses drowning in data can employ RAG models to automate the analysis and reporting processes. By retrieving and synthesizing information from various datasets, RAG can generate insightful, easy-to-understand reports, enabling businesses to quickly grasp complex data landscapes.

- Legal Research:[iv] Legal professionals can use RAG to quickly pull relevant case law, statutes, or legal writings, streamlining the research process and supporting more comprehensive legal analysis.

Conclusion

RAG represents a paradigm shift in the application of AI within the business context, offering unprecedented capabilities to enhance decision-making, customer engagement, and operational efficiency. The combination of accuracy and creativity that RAG offers has enormous potential for business solutions. Future directions appear to be moving toward a hybrid approach that combines the strengths of RAG and fine-tuning.

[i] Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., ... & Kiela, D. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459-9474: link

[ii] What is Retrieval-Augmented Generation? Retrieved 03/01/2024 from: link

[iii] Gao, Y., Xiong, Y., Gao, X., Jia, K., Pan, J., Bi, Y., Dai, Y., Sun, J., & Wang, H. (2023). Retrieval-augmented generation for large language models: A survey. arXiv preprint arXiv:2312.10997: link

[iv] Retrieval Augmented Generation: Best Practices and Use Cases. Retrieved 03/01/2024 from: link

About the author

Dr. Borchuluun Yadamsuren is an Assistant Director of Analytics at the Quinlan School of Business of Loyola University of Chicago. Her current research interest is the application and adoption of generative AI in different domains. She holds an M.A. in journalism and a Ph.D. in information science and learning technologies from the University of Missouri, and a B.S. and an M.S. in computer science from Novosibirsk State Technical University.
