If You Want to Know What AI Does… Just Look at the Objective Function

By Warren B. Powell

Professor Emeritus, Princeton University, Chief Innovation Officer, Optimal Dynamics, and Executive-in-Residence, Rutgers Business School

Any time I see a reference to “AI” I find myself wondering – just what kind of “AI” are they talking about?

Nearly every press article today uses “AI” to refer to the large language models (LLMs) that burst into the public arena in early 2023 and that enable computers to produce text that sounds human. Another manifestation of the same technology can be used to produce vivid, colorful images and movies. Leaders from Elon Musk to Geoffrey Hinton have highlighted the exponential growth in the intelligence of “AI,” and warned of potential dangers to society. Even Sam Altman, CEO of OpenAI, which was the first to release its LLM to the market, has urged caution.

Artificial intelligence was first introduced in the 1950s and has been used to describe a variety of methods that make a computer seem intelligent. LLMs are simply the latest fad and are hardly the highest level of intelligence. It is important to understand, and appreciate, the different flavors of AI, all of which represent powerful technologies that can offer significant benefits.

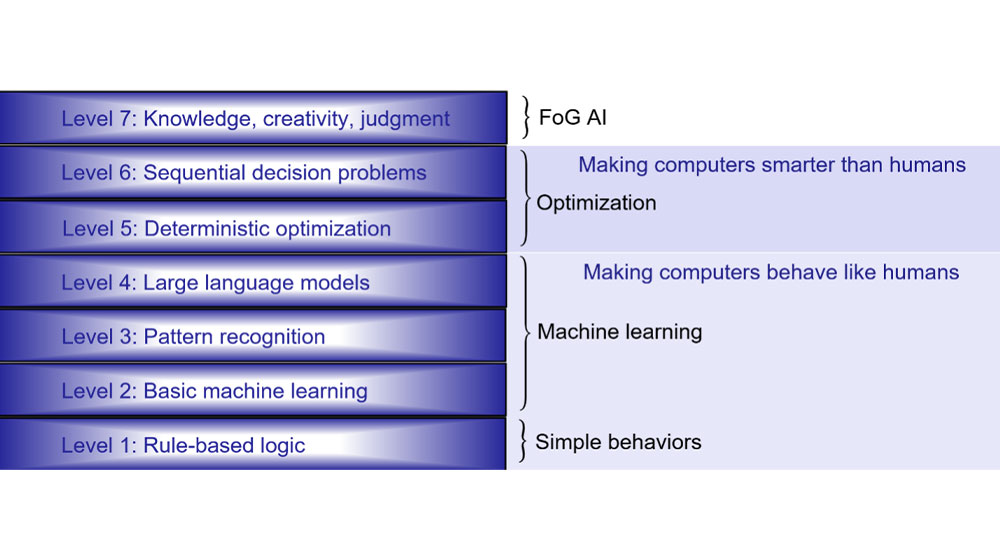

To help organize this discussion, I have identified seven levels of AI as summarized in Figure 1 (taken from [1]). The first four can all be described as making computers behave like humans, a goal which computer scientists have long described as the pinnacle of achievement for intelligence. Levels 5 and 6 describe technologies that aspire to make computers smarter than humans. And then there is level 7 (stay tuned).

Figure 1: The Seven Levels of AI

Level 1 includes rule-based logic that first emerged in the 1950s (when computers were just emerging), but grew to prominence in the 1960s and 70s as computers were showing their real potential. Level 1 could take basic logic problems such as what we are eating for dinner and output a wine recommendation. However, it did not scale to real-world problems such as recommending medical treatments. By the 1980s, “AI” was viewed as a complete failure. What it failed to do was take over the world, but rule-based systems are in widespread use today.

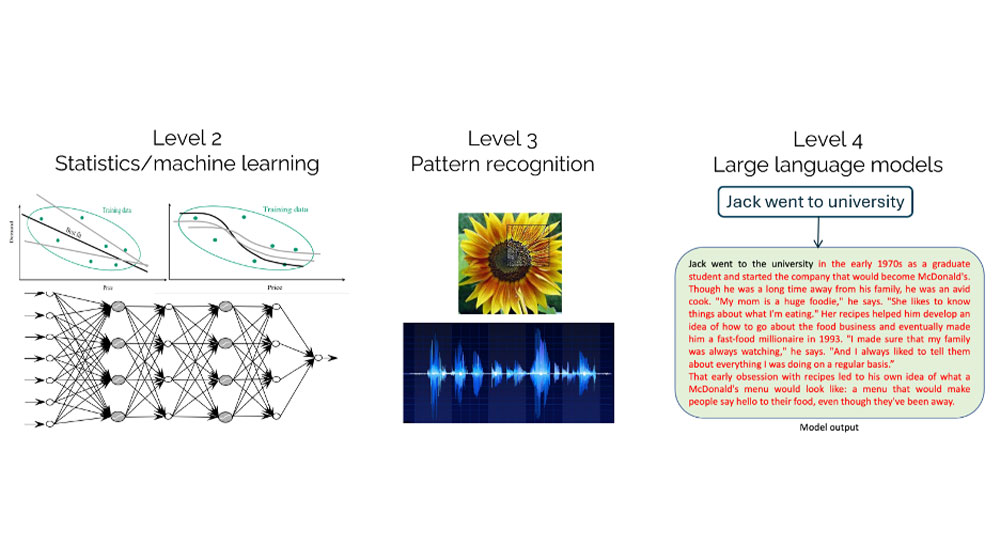

Levels 2, 3, and 4 are all a form of supervised machine learning. Level 2 is what we used to call statistical modeling, where we would use data to train linear or nonlinear models, even early neural networks. Level 3 describes the first emergence of deep neural networks (circa 2010) to perform pattern recognition – what face, what plant, what is the voice saying, what does the handwriting mean? This produced another surge of interest in “AI,” with similar expectations to the initial rule-based systems. Again, this form of AI never met expectations, but it remains a powerful and widely used tool.

Level 4 represents the large language models that emerged in 2023. ChatGPT, developed by OpenAI, was the first to be announced, but many others quickly followed. The LLMs are just another form of deep neural network, but they are much larger than the deep neural networks used for pattern recognition. In addition, LLMs require significant preprocessing of text. Otherwise, they work just like any statistical model where inputs (a sequence of words) are used to “predict” an output (the next word or phrase).

Figure 2: Levels 2-4
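To make the "inputs predict the next word" idea concrete, here is a toy sketch. It is emphatically not how an LLM is built (an LLM uses a very large neural network, not a table of counts); it only illustrates the same input-to-output form on a tiny scale. The function names and the sample sentence are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, for illustration only.
model = train_bigram("the cat sat on the mat the cat ran")
print(predict_next(model, "the"))
```

The model can only echo patterns in its training text; nothing in it represents a goal such as cost or revenue.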

It is important to recognize that levels 2, 3, and 4 all involve designing some function $f(x|\theta)$ that maps an input $x$ to a predicted output, where $\theta$ is a vector of unknown parameters that we estimate from data. Specifically, $\theta$ is chosen so that, given a set of inputs $x^n$ (historical demands, images, or snippets of text), the function $f(x^n|\theta)$ most closely matches an output (or response or label) $y^n$ (observed demands, the name of an image, the word that follows an input sequence of words). Most of the time, a dataset of inputs and outputs $(x^n, y^n)$, $n=1, \ldots, N$, is used to find the best set of parameters $\theta$ that solves the optimization problem:

$$\min_{\theta} \sum_{n=1}^{N} \left( y^n - f(x^n|\theta) \right)^2 \qquad (1)$$

Implicit in our minimization is the search over types of functions, as well as any tunable parameters $\theta$. The function can be linear in $\theta$ (linear regression), or any of a number of nonlinear models, including neural networks. Deep neural networks for image processing can have tens to hundreds of millions of parameters $\theta$, while large language models can have tens of billions to over a trillion parameters, making the functions $f(x|\theta)$ extremely expensive to compute.
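As a minimal sketch of this fitting problem, assume the simplest possible function, a straight line $f(x|\theta) = \theta_0 + \theta_1 x$, and assume the mismatch is measured by squared error. The data and function names below are hypothetical; a neural network replaces the line with a far larger function, but the objective has the same form.

```python
def fit_line(xs, ys):
    """Return (theta0, theta1) minimizing sum_n (y_n - theta0 - theta1 * x_n)^2.

    Uses the closed-form least-squares solution for a single input variable.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    theta1 = s_xy / s_xx
    theta0 = y_bar - theta1 * x_bar
    return theta0, theta1


def squared_error(theta, xs, ys):
    """The training objective: total squared gap between f(x|theta) and y."""
    theta0, theta1 = theta
    return sum((y - (theta0 + theta1 * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical inputs and labels, for illustration only.
inputs = [1.0, 2.0, 3.0, 4.0]
labels = [3.0, 5.0, 7.0, 9.0]
theta = fit_line(inputs, labels)
print(theta, squared_error(theta, inputs, labels))
```

Note what the objective contains: inputs, labels, and parameters. There is no place in it to express a cost, a profit, or a constraint.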

It is important to remember that the objective of any (supervised) machine learning model is to minimize the difference between a function $f(x^n|\theta)$ and the response (label) $y^n$. This means that all these deep neural networks will never be able to pursue any objective such as minimizing cost, maximizing revenue, minimizing manufacturing losses, or maximizing lives saved (or lost). While there are many claims that an LLM is exhibiting reasoning, it is not, because a neural network (or any machine learning model) is unable to pursue any objective other than matching the training dataset.

When ChatGPT first appeared, I asked it to find the shortest path from my house to the airport. It responded with something that really sounded like navigation instructions, full of left and right turns using street names that can be found in the area. The problem was that the path was nonsensical – the streets were not connected, and there was no sense of finding an efficient path. Of course, it had no way of knowing about current traffic delays.

Ask ChatGPT the same question today, and it will tell you to use a navigation system such as Google Maps. Good answer. Google Maps is a specialized technology designed to find the best path. In fact, Google Maps is an example of level 5 AI.
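For contrast, here is a minimal sketch of what a shortest-path solver does. It uses the textbook Dijkstra algorithm on a small hypothetical road network; I am not claiming this is the algorithm inside Google Maps, and the node names and travel times are invented for illustration.

```python
import heapq


def shortest_path(graph, source, target):
    """Dijkstra's algorithm. `graph` maps node -> {neighbor: travel_time}."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry; a shorter route was already found
        if node == target:
            break
        for nbr, w in graph[node].items():
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if target not in dist:
        return None, float("inf")
    # Walk backwards from the target to recover the route.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]


# Hypothetical network: travel times in minutes.
roads = {
    "home": {"A": 4, "B": 2},
    "B": {"A": 1, "airport": 7},
    "A": {"airport": 3},
    "airport": {},
}
print(shortest_path(roads, "home", "airport"))
```

The streets here are connected by construction, and the objective (total travel time) is stated explicitly, which is exactly what the text generated by a language model lacked.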

Level 5 AI covers a powerful library of tools known as “optimization” which are designed for static, deterministic problems. This class of technologies traces its roots to the 1950s with the emergence of linear programming which is universally written:

$$\min_{x} \; c^T x \qquad (2)$$

where we need to find a vector $x$ that minimizes costs subject to constraints that are typically written:

$$Ax = b \qquad (3)$$

$$x \geq 0 \qquad (4)$$

Linear programming laid the foundation for modern optimization by setting the framework for how these models are written, with an objective function (2), and constraints (3)-(4) that capture the physics of the problem in the form of the matrix A and the constraint vector b. The objective function can be designed to minimize costs, inventories, or lives lost, or maximize profits, demand covered, and number of people who are vaccinated. What is important is that we get to choose the objective (in fact, we must choose the objective).
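A minimal sketch of a linear program in this form follows. To keep it self-contained it does not use the simplex algorithm; it brute-forces a tiny problem by enumerating basic solutions, which works only because the example has two constraint rows and is feasible and bounded. The cost vector, matrix, and right-hand side are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def solve_small_lp(c, A, b):
    """Minimize c.x subject to A x = b, x >= 0, for a matrix A with two rows.

    Enumerates every pair of columns, solves the 2x2 system by Cramer's rule,
    and keeps the cheapest nonnegative (basic feasible) solution.
    """
    n = len(c)
    best_x, best_cost = None, None
    for i, j in combinations(range(n), 2):
        det = A[0][i] * A[1][j] - A[0][j] * A[1][i]
        if det == 0:
            continue  # columns i and j do not form a basis
        xi = Fraction(b[0] * A[1][j] - A[0][j] * b[1], det)
        xj = Fraction(A[0][i] * b[1] - b[0] * A[1][i], det)
        if xi < 0 or xj < 0:
            continue  # violates x >= 0
        x = [Fraction(0)] * n
        x[i], x[j] = xi, xj
        cost = sum(ck * xk for ck, xk in zip(c, x))
        if best_cost is None or cost < best_cost:
            best_x, best_cost = x, cost
    return best_x, best_cost


# Hypothetical data: two products, two resources, two slack variables.
c = [-3, -2, 0, 0]                 # minimizing -profit maximizes profit
A = [[1, 1, 1, 0],
     [1, 3, 0, 1]]
b = [4, 6]
x, cost = solve_small_lp(c, A, b)
print([str(v) for v in x], cost)
```

Changing the vector `c` changes what "best" means without touching the constraints, which is the sense in which we get to choose the objective.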

Note that our machine learning models (and I mean all machine learning models) lack an objective function that reflects the goals of a specific problem, or the ability to express the physics of the problem (as we do with our constraints (3)-(4)). Empowered with an objective and constraints, powerful algorithms (such as the simplex algorithm for linear programs) can produce solutions that outperform what a human can do.

Level 6 covers an extraordinarily rich class of problems known as sequential decision problems which all consist of the sequence:

Decision, information, decision, information, …

Each time we make a decision $x_t$, we use information in a state variable $S_t$ (think of $S_t$ as every piece of information in a database), after which new information arrives that we call $W_{t+1}$ (customer demands, shipping delays, changes in market conditions) which we did not know when we made our decision $x_t$. We evaluate our performance at each point in time with a metric $C(S_t, x_t)$ which we may maximize or minimize. The state variable evolves according to a state transition model that we express using:

$$S_{t+1} = S^M(S_t, x_t, W_{t+1}) \qquad (5)$$

Our state transition model $S^M(\cdot)$ is where we put all the physics of the problem. State transition models can be a single equation to update inventories, or tens of thousands (even tens of millions) of equations that capture the updating of every piece of information in a database. Decisions are made with a method we call a policy, which is a function that we denote $X^\pi(S_t|\theta)$ which depends on the information in $S_t$, and often depends on parameters $\theta$ that need to be tuned. Just as we had to pick a type of function $f(x|\theta)$ when we were solving machine learning problems, we also have to choose a type of function for making decisions. Then we have to tune $\theta$ just as we did for our machine learning problems. Finally, since the information sequence $W_t$ is random (for example, we do not know sales, or prices, or weather, in advance), we must average over all possible outcomes. This objective would be written:

$$\max_{\theta} \; \mathbb{E} \left\{ \sum_{t=0}^{T} C\big(S_t, X^\pi(S_t|\theta)\big) \,\Big|\, S_0 \right\} \qquad (6)$$

where the state variable evolves according to the state transition function in (5), and where the expectation operator $\mathbb{E}$ averages over all the possible outcomes of the information in $W_1, W_2, \ldots, W_t, \ldots, W_T$ (we typically approximate this by taking a sample). The objective is written here as a maximization; we would minimize if $C$ were a cost. As with equation (1), we are not just optimizing over our tunable parameters $\theta$; we also have to search over different types of functions to represent our policy $X^\pi(S_t|\theta)$. See [2] for a discussion of the different classes of policies.
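The pieces of a sequential decision problem (state, decision, new information, transition, policy, and a sampled objective) can be sketched on a toy inventory problem. Everything specific here is an assumption made for illustration: the order-up-to policy, the prices and costs, the uniform demand distribution, and the grid search used to tune $\theta$. For simplicity the contribution is computed after the demand is revealed.

```python
import random


def policy(S, theta):
    """Order-up-to policy: order enough to raise inventory S to theta."""
    return max(0, theta - S)


def transition(S, x, W):
    """State transition: inventory after ordering x and serving demand W."""
    return max(0, S + x - W)


def contribution(S, x, W, price=10.0, cost=4.0, hold=1.0):
    """Revenue from sales minus ordering and holding costs (hypothetical numbers)."""
    sales = min(S + x, W)
    leftover = S + x - sales
    return price * sales - cost * x - hold * leftover


def simulate(theta, demands, S0=0):
    """Total contribution of the policy along one sample path of demands."""
    S, total = S0, 0.0
    for W in demands:
        x = policy(S, theta)          # decision using only the current state
        total += contribution(S, x, W)
        S = transition(S, x, W)       # new information W updates the state
    return total


def tune(candidates, n_paths=200, T=30, seed=0):
    """Approximate the expectation by averaging over sampled demand paths."""
    rng = random.Random(seed)
    paths = [[rng.randint(0, 20) for _ in range(T)] for _ in range(n_paths)]
    values = {
        theta: sum(simulate(theta, p) for p in paths) / n_paths
        for theta in candidates
    }
    best = max(values, key=values.get)
    return best, values


best_theta, values = tune(range(0, 31, 5))
print(best_theta, round(values[best_theta], 1))
```

Swapping in a different `contribution` function (say, one that penalizes lost sales) changes which $\theta$ is best, while the physics in `transition` stays the same.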

Sequential decision problems are astonishingly rich, and they arise throughout business and supply chain management. In fact, virtually any problem requiring that someone make a decision is a sequential decision problem, from choosing facilities and suppliers, to ordering inventory, pricing, marketing, personnel planning, and equipment scheduling.

Before we move to level 7, pause and compare the machine learning problem in equation (1) and the search for the best policy in equation (6). Both require tuning a vector of parameters. However, in machine learning we can only minimize the error between our function f(x|θ) and the label y. With sequential decision problems, we get to choose the objective, and we are also required to specify all the dynamics (physics) of the problem in the state transition function (5).

Unstated in both objectives is the need to find the best type of function. For machine learning, an analyst can choose among some combination of lookup tables, parametric functions (linear or nonlinear, including neural networks), and nonparametric functions. For sequential decision problems, an analyst needs to decide what type of function (called a policy) to use to make decisions. Policies can range from simple order-up-to functions for inventory problems to complex strategies that plan into the future. There are four classes of policies, the first of which includes every function that might be used in machine learning (see [P] for a quick summary, or Chapter 13 in [3] for a more complete description).

Levels 5 and 6 both offer the potential to produce decisions that outperform a human, but the price of this power is that we must provide our own objective function, and we have to fully specify the characteristics of the problem. As a result, any AI models developed for levels 5 and 6 are going to be highly specialized, just as Google Maps can only solve shortest-path problems.

So what about level 7, which I have labeled “FoG AI” (for “finger of God,” a phrase from the 1996 movie Twister)? I suspect most readers familiar with artificial intelligence have read concerns about “AI” surpassing humans in intelligence, whether it is curing cancer or guiding militaries. Visions of autonomous robots initiating attacks conjure images of machines taking over the world (the video [4] provides a vivid example).

What these visions ignore is that the tools that are actually available in the form of implementable algorithms all fall in levels 1 through 6. Most references to “AI” in the press today are based on the apparent magic of large language models (level 4), which cannot perform planning (you cannot solve a shortest path problem with a neural network), a necessary skill whenever we have to manage the movement of physical resources. Planning is possible using levels 5 and 6, but these tools are limited to very specific applications (such as solving the shortest path or planning inventories) since the physics of the underlying problem has to be hard coded into the software.

So, Level 7 remains in the realm of science fiction. No amount of training will produce a neural network that can manage a fleet of robots, since this requires an understanding of the environment, and the use of explicit objectives such as killing people or destroying machines, which has nothing to do with the objective in equation (1). This is the reason that the leading AI scientist at Meta, Yann LeCun, stated “A cat can remember, can understand the physical world, can plan complex actions, can do some level of reasoning – actually much better than the biggest LLMs.”

This does not mean that neural networks are irrelevant. The pattern-matching capabilities of neural networks are powerful tools for understanding the environment, whether this is through statistical estimation, pattern recognition (images, voice, handwriting), or natural language processing. However, while understanding the state of the world is a critical skill, it will never provide the capabilities needed to plan more complex attacks beyond “this is the enemy so shoot” (something that is now happening in Ukraine).

In time, we will divide Level 7 into distinct levels to capture future steps. Combining the capabilities of state estimation from Levels 2, 3, and 4 with the decision-making capabilities of Levels 5 and 6 represents a natural next step. However, Levels 5 and 6 require well-defined decisions, something that does not typically exist in more complex problems. In the English language, the word “idea” often means a possible decision, which could be a new product, a marketing strategy, or a logistics concept such as just-in-time manufacturing used in Toyota City. None of the first six levels can initiate an idea.

A reason why Level 7 capabilities are likely to remain the stuff of science fiction is the same reason that most of the current surge of “AI” companies developing large language models will fail: the lack of a business purpose that will cover the cost. These tools are becoming expensive, and they can only be justified if there are economic benefits. The initial wave of LLM startups has depended on the generous support of major tech companies like Microsoft, as well as the usual crowd of investors hoping to get into the market early. Most of this money will be lost, as has happened in the past. Advanced technologies need noble causes to motivate their development.

About the author

Warren B. Powell is Professor Emeritus at Princeton University, where he taught for 39 years, and is currently the Chief Innovation Officer at Optimal Dynamics. He was the founder and director of CASTLE Lab, which focused on stochastic optimization for a wide range of applications, leading to his universal framework for sequential decision problems and the four classes of policies. He has co-authored over 250 publications and five books, based on the contributions of over sixty graduate students and post-docs. He is the 2021 recipient of the Robert Herman Lifetime Achievement Award from the Society for Transportation Science and Logistics and the 2022 Saul Gass Expository Writing Award.
